- Review

- Open access

Indoor navigation: state of the art and future trends

Satellite Navigation volume 2, Article number: 7 (2021)

Abstract

This paper reviews the state of the art and future trends of indoor Positioning, Localization, and Navigation (PLAN). It covers the requirements, the main players, sensors, and techniques for indoor PLAN. Other than the navigation sensors such as Inertial Navigation System (INS) and Global Navigation Satellite System (GNSS), the environmental-perception sensors such as High-Definition map (HD map), Light Detection and Ranging (LiDAR), camera, the fifth generation of mobile network communication technology (5G), and Internet-of-Things (IoT) signals are becoming important aiding sensors for PLAN. The PLAN systems are expected to be more intelligent and robust under the emergence of more advanced sensors, multi-platform/multi-device/multi-sensor information fusion, self-learning systems, and the integration with artificial intelligence, 5G, IoT, and edge/fog computing.

Introduction

The Positioning, Localization, and Navigation (PLAN) technology has been widely studied and successfully commercialized in many applications such as mobile phones and unmanned systems. In particular, indoor PLAN technology is becoming increasingly important with the emergence of new chip-level Micro-Electromechanical System (MEMS) sensors, positioning big data, and Artificial Intelligence (AI) technology, as well as the increase of public interest and social potential.

The market value of indoor navigation: social benefits and economic value

The global indoor PLAN market is expected to reach $ 28.2 billion by 2024, growing at a Compound Annual Growth Rate (CAGR) of 38.2% (Goldstein 2019). Indoor PLAN has attracted the attention of not only consumer giants such as Apple and Google but also self-driving players such as Tesla and Nvidia. This is because the emerging vehicle applications (e.g., autonomous driving and connected vehicles) need indoor-PLAN capability. Compared with traditional vehicles, unmanned vehicles face three important problems: PLAN, environmental perception, and decision-making. A vehicle needs to position itself within the surrounding environment before making decisions. Therefore, only by solving the indoor PLAN problem can fully autonomous driving and location services be achieved.

Social benefits. Accurate PLAN can serve safety and medical applications and benefit special groups such as the elderly, children, and the disabled. Meanwhile, PLAN technology can bring a series of location services, such as Mobility as a Service (MaaS), which increases travel convenience and security and reduces carbon emissions (by replacing owned vehicles with shared ones). Also, reliable PLAN technology can reduce road accidents, 94% of which are caused by human error (Singh 2015).

Economic values. As a major source of demand for indoor PLAN, autonomous driving technology is expected to reduce the ratio of owned to shared vehicles to 1:1 by 2030 (Schönenberger 2019). By 2050, autonomous cars are expected to bring savings of 800 billion dollars annually by reducing congestion, accidents, energy consumption, and time consumption (Schönenberger 2019). These social and economic benefits drive the demand for PLAN technology in the autonomous driving and mass consumer markets.

Classification of indoor navigation from market perspective

PLAN technology is highly related to market demand. Table 1 shows the accuracy requirements and costs of several typical indoor PLAN applications.

In general, for the applications that require higher accuracy, the facilities and equipment costs are correspondingly higher. In many scenarios (e.g., the mass-market ones), the minimum equipment installation cost and equipment cost are important factors that limit the scalability of PLAN technology.

Industry and construction require the PLAN accuracy at the centimeter- or even millimeter-level. For example, the accuracy requirements for machine guidance and deformation analysis are 1–5 cm and 1–5 mm, respectively. The corresponding cost is in the $ 10,000 level (Schneider 2010).

Compared with industry and construction, the PLAN accuracy requirements for autonomous driving are lower. However, the application scene is much larger and changes in more complex ways; also, the cost is more restrictive. Such factors increase the challenge of PLAN in autonomous driving. The Society of Automotive Engineers divides autonomous driving into L0 (no automation), L1 (driver assistance), L2 (partial automation), L3 (conditional automation, which requires drivers to be ready to take over when the vehicle has an emergency alert), L4 (high automation, which does not require any user intervention but is limited to specific operational design domains, such as areas with specific facilities and High-Definition maps (HD maps)), and L5 (full automation) (SAE-International 2016). In most situations, autonomous cars mean L3 and above. L5 is still some distance from commercial use (Wolcott and Eustice 2014). An important bottleneck is that it is difficult for PLAN technology to meet the requirements in all environments.

There are various derivations and definitions of the accuracy requirement of autonomous driving. Table 2 lists several of those derivations and definitions.

The research work (Basnayake et al. 2010) shows the accuracy requirements in Vehicle-to-Everything (V2X) applications for which-road (within 5 m), which-lane (within 1.5 m), and where-in-lane (within 1.0 m). The National Highway Traffic Safety Administration (NHTSA 2017) tentatively reports a requirement of 1.5 m (1 sigma, 68% probability) for lane-level information for safety applications. The research work (Reid et al. 2019) derives an accuracy requirement based on road geometry standards and vehicle dimensions. For passenger vehicle operation, the bounds of lateral and longitudinal position errors are respectively 0.57 m (95% probability in 0.20 m) and 1.40 m (95% probability in 0.48 m) on freeway roads, and both 0.29 m (95% probability in 0.10 m) on local streets. In contrast, the research work (Levinson and Thrun 2010) believes that centimeter positioning accuracy (with a Root Mean Square (RMS) error within 10 cm) is sufficient for public roads, while the report (Agency 2019) defines the accuracy for autonomous driving to be within 20 cm in horizontal and within 2 m in height. Meanwhile, the research work (Stephenson 2016) reports that active vehicle control in ADAS and autonomous driving applications requires an accuracy better than 0.1 m. Beyond research, the goal for autonomous driving is set at the centimeter-level by many autonomous-driving companies (e.g., (Nvidia 2020)). To summarize, autonomous driving requires PLAN accuracy at the decimeter-level to centimeter-level. The current cost is in the order of $ 1000 to $ 10,000 (when using three-Dimensional (3D) Light Detection and Ranging (LiDAR)).

For indoor mapping, the review paper (Cadena et al. 2016) shows that the accuracy within 10 cm is sufficient for two-Dimensional (2D) Simultaneous Localization and Mapping (SLAM). Indoor mapping is commonly conducted with a vehicle that moves slower in a smaller area when compared with autonomous driving. The cost of a short-range 2D LiDAR for indoor mapping is in the order of $ 1000.

The research work (Rantakokko et al. 2010) illustrates that first responders require indoor PLAN accuracy of 1 m in horizontal and within 2 m in height. The cost for first responders is at the $ 1,000-level.

For mass-market applications, it is difficult to find a standard PLAN accuracy requirement. An accepted accuracy classification is that 1–5 m is high, 6–10 m is moderate, and over 11 m is low (Dodge 2013). The vertical accuracy requirement is commonly at the floor-level. For such applications, it is important to use existing consumer equipment and reduce base station deployment costs. On average, deployment in an area on the order of 100 m² costs on the order of $ 10. The E-911 cellular emergency system uses cellular signals and has an accuracy requirement of a 50 m error at the 80% level (FCC 2015).

The cost of indoor PLAN applications depends on the sensors used. The main sensors and solutions will be introduced in the following section.

Main players of indoor navigation

Various researchers and manufacturers investigate indoor PLAN problems from different perspectives.

Table 3 lists selected research works that reflect the typical navigation accuracy of different sensors, while Table 4 shows selected players from industry. The primary sensor, reported accuracy, and sensor costs are covered.

The actual PLAN performance is related to the factors such as infrastructure deployment (e.g., sensor type and deployment density), sensor grade, environment factors (e.g., the significance of features and area size), and vehicle dynamics.

In general, different types of sensors have various principles, measurement types, PLAN algorithms, performances, and costs. It is important to select the proper sensor and PLAN solution according to requirements.

State of the art

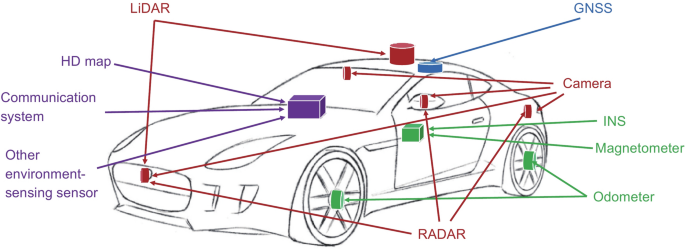

To achieve accurate and robust PLAN for autonomous vehicles, multiple types of sensors and techniques are required. Figure 1 shows part of the PLAN sensors that have been used in autonomous cars. This section summarizes the state-of-the-art sensors and PLAN techniques.

Sensors for indoor navigation

The sensors include environmental monitoring and awareness sensors (e.g., HD map, LiDAR, RAdio Detection and Ranging (RADAR), camera, WiFi/BLE, 5G, and Low-Power Wide-Area Network (LPWAN)), and the navigation sensors (e.g., Inertial Navigation Systems (INS) and GNSS). The advantages and challenges for each sensor are also introduced and compared.

Environmental monitoring and awareness sensors (aiding sensors for navigation system)

HD maps

Car-mounted road maps have been successfully commercialized since the beginning of this century. Also, companies such as Google and HERE have launched indoor maps for public places. These maps contain roads, buildings, and Point-of-Interest (POI) information and commonly have meter-level to decimeter-level accuracy. The main purpose of these maps is to assist people to navigate and perform location service applications. The main approaches for generating these maps are satellite imagery, land-based mobile mapping, and onboard GNSS crowdsourcing.

In the past decade, HD maps have received extensive attention. An important reason is that traditional maps are designed for people, not machines. Therefore, the accuracy of the traditional map cannot meet the requirements of autonomous driving. Also, the traditional map does not contain enough real-time information for autonomous driving, which requires not only information about the vehicle, but also information about external facilities (Seif and Hu 2016). With these features, the HD map is not only a map but also a "sensor" for PLAN and environment perception. Table 5 compares the traditional map and HD map.

HD map is key to autonomous driving. It is generally accepted that HD maps require centimeter-level accuracy and ultra-high (centimeter-level or higher) resolution. Accordingly, creating HD maps is a challenge. The creation and updating of the current HD maps are dependent on professional vehicles equipped with high-end LiDAR, cameras, RADARs, GNSS, and INS. For example, Baidu spent 5 days building an HD map in a Beijing park by using million-dollar-level mapping vehicles (Synced 2018). Such a generation method is costly; also, it is difficult to update an HD map continuously.

To mitigate the updating issue, crowdsourcing based on car-mounted cameras has been researched. This method can lower the requirement of extra data collection if the images from millions of cars are used properly. However, this task is extremely challenging. First, it is difficult to obtain the PLAN solutions that are accurate enough for HD map updating with crowdsource data. Furthermore, to update the HD map in an area effectively where changes have occurred, there are challenges in transmitting, organizing, and processing massive crowdsourced data. For example, one hour of autonomous driving may collect one terabyte of data (Seif and Hu 2016). It takes 230 days to transfer one week’s autonomous driving data using WiFi (MachineDesign 2020). Thus, dedicated onboard computing chips, high-efficiency communication, and edge computing are needed. Therefore, crowdsourcing HD maps requires cooperation from car manufacturers, map manufacturers, 5G manufacturers, and terminal manufacturers (Abuelsamid 2017).

LiDAR

LiDAR systems use laser light waves to measure distances and generate point clouds (i.e., a set of 3D points). The distance is computed by measuring the time of flight of a light pulse, while the direction of a transmitted laser is tracked by gyros. By matching the measured point cloud with that stored in a database, an object can be located.
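The time-of-flight principle described above can be illustrated with a minimal sketch. It assumes an ideal pulse, ignores the refractive index of air, and takes the beam direction (azimuth and elevation) as already known; the function names are illustrative and do not belong to any LiDAR vendor's interface.

```python
import math

# Speed of light in vacuum (m/s); the small effect of air is ignored here.
SPEED_OF_LIGHT = 299_792_458.0


def tof_to_range(round_trip_time_s):
    """Convert a round-trip time of flight (s) to a one-way distance (m)."""
    return SPEED_OF_LIGHT * round_trip_time_s / 2.0


def beam_to_point(range_m, azimuth_rad, elevation_rad):
    """Turn one range/direction measurement into a 3D point in the sensor frame."""
    horizontal = range_m * math.cos(elevation_rad)
    return (
        horizontal * math.cos(azimuth_rad),
        horizontal * math.sin(azimuth_rad),
        range_m * math.sin(elevation_rad),
    )


# A pulse that returns after 800 ns corresponds to a target roughly 120 m away.
distance = tof_to_range(800e-9)
point = beam_to_point(distance, math.radians(30.0), math.radians(2.0))
```

Repeating `beam_to_point` over all beams of one scan yields the point cloud that is later matched against a stored map.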

LiDAR is an important PLAN sensor on unmanned vehicles and robots. Figure 2 compares the PLAN-related performance of the camera, LiDAR, and RADAR.

The main advantages are its high accuracy and data density. For example, the Velodyne HDL-64E LiDAR has a measurement range of over 120 m, with ranging accuracy of 1.5 cm (1 sigma) (Glennie and Lichti 2010). The observation can cover 360° horizontally, with up to 2.2 million points per second (Velodyne 2020). Such features make LiDAR a strong candidate in providing high-definition surrounding environment information.

The main challenges of using LiDAR are the high price and large size. Also, the current LiDAR system has a rotation mechanism on the top of the carrier, which may limit its life span. Some manufacturers try to use solid-state LiDAR to alleviate these problems. Apple has unveiled a new iPad Pro with a LiDAR scanner, which may bring new directions to indoor PLAN.

LiDAR measurements are used for PLAN through 2D or 3D matching. For example, the research works (de Paula Veronese et al. 2016) and (Wolcott and Eustice 2017) match LiDAR measurements with a 2D grid map and a 3D point cloud map, respectively. The PLAN performance is generally better when the surrounding environment features are significant and distinct from other places; otherwise, performance is limited. The LiDAR measurement performance will not be affected by light but may be affected by weather conditions.

Camera

Cameras are used for PLAN and perception by collecting and analyzing images. Compared with LiDAR and RADAR, the camera has a much lower cost. It also provides rich feature and color information. In addition, the camera is a passive sensing technology: it does not transmit signals and thus does not have errors on the signal-propagation side. Moreover, current 2D computer vision algorithms are more advanced, which has also promoted the application of cameras.

Similar to LiDAR, the camera depends on the significance of environmental features. Also, the camera is more susceptible to weather and illumination conditions. Its performance degrades under harsher conditions, such as in darkness, rain, fog, and snow. Thus, it is important to develop camera sensors with self-cleaning, longer dynamic range, better low light sensitivity, and higher near-infrared sensitivity. Furthermore, the amount of raw camera data is large. Multiple cameras on an autonomous vehicle can generate gigabyte-level raw data every minute or even every second.

Some PLAN solutions use cameras, instead of a high-end LiDAR, to reduce hardware cost. An example is Tesla's autopilot system (Tesla 2020). This system contains many cameras, including three forward cameras (wide, main, and narrow), four side cameras (forward and rearward), and a rear camera. To assure the PLAN performance in the environments that are challenging for cameras, RADARs and ultrasonic sensors are used.

The two main camera-based PLAN approaches are visual odometry/SLAM and image matching. For the former, the research work (Mur-Artal and Tardós 2017) can support visual SLAM using monocular, stereo, and Red–Green–Blue-Depth (RGB-D) cameras. For image matching, road markers, signs, poles, and artificial features (e.g., Quick Response (QR) codes) can be used. The research work (Gruyer et al. 2016) uses two cameras to take the ground road marker and match it with a precision road marker map. In contrast, the research works (Wolcott and Eustice 2014) and (McManus et al. 2013) respectively use images from monocular and stereo cameras to match the 3D point cloud map generated by a survey vehicle equipped with 3D LiDAR scanners.

RADAR

RADAR has also received intensive attention in the autonomous driving industry. Similar to LiDAR, the RADAR determines the distance by measuring the round-trip time difference of the signal. The difference is that the RADAR emits radio waves instead of laser waves. Compared with LiDAR, the RADAR generally has a longer measurement range. For example, the Bosch LRR RADAR can reach up to 250 m. Also, the price of a RADAR system has dropped to the order of $ 1,000 to $ 100. Moreover, RADAR systems are lightweight, which makes it possible to embed them in cars.

On the other hand, the density of RADAR measurements is much lower than that of LiDARs and cameras. Therefore, RADAR is often used for obstacle avoidance, rather than as the main sensor of PLAN. Similar to LiDAR, the measurement performance of RADAR is not affected by light but may be affected by weather conditions.

WiFi/BLE

WiFi and BLE are the most widely used indoor wireless PLAN technologies for consumer electronics. The commonly used observation is RSS (Zhuang et al. 2016), and the typical positioning accuracy is at meter-level. Also, researchers have extracted high-accuracy measurements, such as CSI (Halperin et al. 2011), RTT (Ciurana et al. 2007), and AoA (Quuppa 2020). Such measurements can be used for decimeter-level or even centimeter-level PLAN.

A major advantage of WiFi systems is that they can use existing communication facilities. In contrast, BLE is flexible and convenient to deploy. To meet the future Internet-of-Things (IoT) and precise localization requirements, new features have been added to both the latest WiFi and BLE technologies. Table 6 lists the new WiFi, BLE, 5G, and LPWAN features that can enhance PLAN. WiFi HaLow (WiFi-Alliance 2020) and Bluetooth long range (Bluetooth 5) (Bluetooth 2017) are released to improve the signal range, while WiFi RTT (IEEE 802.11 mc) (IEEE 2020) and Bluetooth direction finding (Bluetooth 5.1) (Bluetooth 2019) have been released for precision positioning.

5G/LPWAN

5G has attracted intensive attention due to its high speed, high reliability, and low latency in communication. Compared with previous cellular technologies, 5G has defined three application categories (Restrepo 2020), including Ultra-Reliable and Low-Latency Communication (URLLC) for high-reliability (e.g., 99.999% reliable under 500 km/h high-speed motion) and low-latency (e.g., millisecond-level) scenarios (e.g., vehicle networks, industrial control, and telemedicine), enhanced Mobile Broad Band (eMBB) for high-data-rate (e.g., gigabit-per-second-level, with a peak of 10 gigabits-per-second) and strong mobility scenarios (e.g., video, augmented reality, virtual reality, and remote officing), and massive Machine-Type Communication (mMTC) for application scenarios (e.g., intelligent agriculture, logistics, home, city, and environment monitoring) that have massive nodes which have a low cost, low power consumption, and low data rate.

5G has strong potential to change the cellular-based PLAN. First, the coverage range of 5G base stations may be shrunk from kilometers to hundreds of meters or even within 100 m (Andrews et al. 2014). The increase of base stations will enhance the signal geometry and mitigate Non-Line-of-Sight (NLoS) conditions. Second, 5G has new features, including mmWave Multiple-Input and Multiple-Output (MIMO), large-scale antenna, and beamforming. These features make it possible to use multipath signals to enhance PLAN (Witrisal et al. 2016). Third, 5G may introduce device-to-device communication (Zhang et al. 2017a), which makes cooperative PLAN possible.

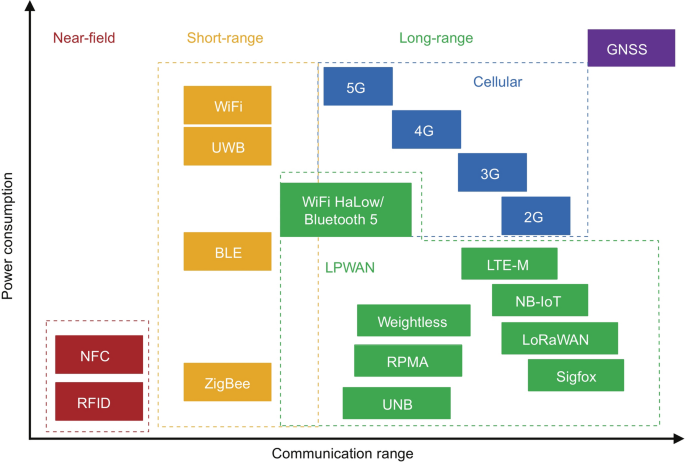

Meanwhile, the newly emerged IoT signals and the Low-Power Wide-Area Network (LPWAN, e.g., long-range (LoRa), Narrow Band-IoT (NB-IoT), Sigfox, and Long Term Evolution for Machines (LTE-M)) have advantages such as long range, low cost, low power consumption, and massive connections (Li et al. 2020a). Figure 3 demonstrates the communication ranges of 5G and LPWAN signals, with a comparison with other wireless technologies.

Signal ranges of 5G, LPWAN, and other wireless technologies (Li et al. 2020a)

5G and LPWAN systems provide a possibility for the wide-area localization in indoor and urban areas. Similar to 5G, LPWAN systems no longer require an extra communication module that costs $ 10 level in the current PLAN systems. LPWAN signals are compatible with more and more smart home appliances. These nodes will increase the deployment density of IoT networks and thus enhance PLAN performance. Also, it is feasible to add new measurement types (e.g., TDoA (Leugner et al. 2016) and AoA (Badawy et al. 2014)) into the 5G and LPWAN base stations.

Most of the existing research on 5G and LPWAN based PLAN is based on theoretical analysis and simulation data because there are few real systems. The standard for mmWave signals was released late, and it is therefore difficult to find hardware for experiments. The accuracy ranges from 100-m-level to centimeter-level, depending on the base station deployment density and the type of measurement used. The survey paper (Li et al. 2020a) provides a systematic review of 5G and LPWAN standardizations, PLAN techniques, error sources, and mitigation. In particular, it groups the PLAN errors into end-device-related errors, environment-related errors, base-station-related errors, and data-related errors. It is important to mitigate these error sources when using 5G and LPWAN signals for PLAN purposes.

There are indoor PLAN solutions based on other types of environmental signals, such as the magnetic (Kok and Solin 2018), acoustic (Wang et al. 2017), air pressure (Li et al. 2018), visible light (Zhuang et al. 2019), and mass flow (Li et al. 2019a).

Navigation and positioning sensors

Inertial navigation system

An INS derives motion states by using angular-rate and linear specific-force measurements from gyros and accelerometers, respectively. The review paper (El-Sheimy and Youssef 2020) summarizes the state of the art and future trends of inertial sensor technologies. INS is traditionally used in professional applications such as military, aerospace, and mobile surveying. Since the 2000s, low-cost MEMS-based inertial sensors have been introduced into the PLAN of land vehicles (El-Sheimy and Niu 2007a, b). Since the release of the iPhone 4, MEMS-based inertial sensors have become a standard feature on smartphones and have brought in new applications such as gyro-based gaming and pedestrian indoor PLAN. Table 7 compares typical inertial sensor performance in mobile mapping and mobile phones. Different grades of inertial sensors have different performances and costs. Thus, it is important to select a proper type of inertial sensor according to application requirements.

The INS can provide autonomous PLAN solutions, which means it does not require the reception of external signals or the interaction with external environments. Such a self-contained characteristic makes it a strong candidate to ensure PLAN continuity and reliability when the performances of other sensors are degraded by environmental factors. An important error source for INS-based PLAN is the existence of sensor errors, which will accumulate and lead to drifts in PLAN solutions. There are deterministic and stochastic sensor errors. The impact of deterministic errors (e.g., biases, scale factor errors, and deterministic thermal drifts) may be mitigated through calibration or on-line estimation (Li et al. 2015). In contrast, stochastic sensor errors are commonly modeled as stochastic processes (e.g., white noises, random walk, and Gaussian–Markov processes) (Maybeck 1982). The statistical parameters of stochastic models can be estimated by the methods such as power spectral density analysis, Allan variance (El-Sheimy et al. 2007), and wavelet variance (Radi et al. 2019).
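As an illustration of how the statistical parameters of stochastic sensor errors may be obtained, the following minimal sketch computes the non-overlapping Allan variance of a static gyro or accelerometer record. It is a textbook form of the estimator rather than the procedure of any cited work; the function names and the choice of cluster sizes are assumptions made for the example.

```python
def allan_variance(samples, cluster_size):
    """Non-overlapping Allan variance for clusters of `cluster_size` samples."""
    n_clusters = len(samples) // cluster_size
    if n_clusters < 2:
        raise ValueError("need at least two full clusters")
    # Average the signal over consecutive, non-overlapping clusters.
    means = [
        sum(samples[k * cluster_size:(k + 1) * cluster_size]) / cluster_size
        for k in range(n_clusters)
    ]
    # Half the mean squared difference between successive cluster averages.
    squared_diffs = [(b - a) ** 2 for a, b in zip(means, means[1:])]
    return sum(squared_diffs) / (2.0 * (n_clusters - 1))


def allan_deviation_curve(samples, sample_interval_s, cluster_sizes):
    """Return (averaging time, Allan deviation) pairs for the given cluster sizes."""
    return [
        (m * sample_interval_s, allan_variance(samples, m) ** 0.5)
        for m in cluster_sizes
    ]
```

Plotting the resulting curve on log-log axes is the usual way to read off noise terms: for example, a slope of -1/2 indicates white noise (angle or velocity random walk), while the flat minimum indicates bias instability.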

Global navigation satellite system (as an initializer)

GNSS localizes a receiver using satellite multilateration. It is one of the most widely used and best-commercialized PLAN technologies. Standalone GNSS and GNSS/INS integration are the mainstream PLAN solutions for outdoor applications. In autonomous driving, GNSS is shifting from the primary PLAN sensor to a secondary role. The main reason is that GNSS signals may be degraded in urban and indoor areas. Even so, high-precision GNSS is still important to provide an initial localization to reduce the searching space and computational load of other sensors (e.g., HD map and LiDAR) (Levinson et al. 2007).

The previous boundaries between high-precision professional and mass-market GNSS uses are blurring. One piece of evidence is the integration of high-precision GNSS techniques into mass-market chips. Also, the latest smartphones are able to provide high-precision GNSS measurements and PLAN solutions.

Table 8 lists the main GNSS positioning techniques. Single Point Positioning (SPP) and Differential-GNSS (DGNSS) are based on pseudo-range measurements, while Real-Time Kinematic (RTK), Precise Point Positioning (PPP), and PPP with Ambiguity Resolution (PPP-AR) are based on carrier-phase measurements. DGNSS and RTK are relative positioning methods that mitigate some errors by differencing measurements across the rover and base receivers. In contrast, PPP and PPP-AR provide precise positioning at a single receiver by using precise satellite orbit correction, clock correction, and parameter-estimation models. They commonly need minutes for convergence (Trimble 2020).
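The differencing idea behind DGNSS and RTK can be shown with a simplified pseudorange model. The sketch below assumes a short baseline, so that satellite clock and atmospheric errors are identical at the rover and the base; the model, the function names, and the numbers are illustrative assumptions only.

```python
def pseudorange(geometric_range_m, receiver_clock_m, satellite_clock_m, atmosphere_m):
    """Simplified pseudorange model; all error terms are expressed in metres."""
    return geometric_range_m + receiver_clock_m - satellite_clock_m + atmosphere_m


def single_difference(rover_obs_m, base_obs_m):
    """Between-receiver difference of observations to the same satellite."""
    return rover_obs_m - base_obs_m


# Errors common to both receivers (assumed equal over a short baseline).
sat_clock_m, atmosphere_m = 300.0, 8.0

rover = pseudorange(20_000_100.0, 15.0, sat_clock_m, atmosphere_m)
base = pseudorange(20_000_000.0, -4.0, sat_clock_m, atmosphere_m)

# Only the range difference (100 m) and the receiver clock difference (19 m) remain.
sd = single_difference(rover, base)
```

Differencing a second time across two satellites would also remove the receiver clock difference, which is the double-difference observable commonly used in RTK.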

There are other types of PLAN sensors, such as magnetometer, odometer, UWB, ultrasonic, and pseudolite. In recent years, relatively low-cost UWB and ultrasonic sensors have appeared (e.g., (Decawave 2020; Marvelmind 2020)). Such sensors can typically provide decimeter-level ranging accuracy within a distance of 30 m. Also, Apple has built a UWB module into the iPhone 11, which may bring new opportunities for indoor PLAN. To summarize, Table 9 illustrates the principle, advantages, and disadvantages of the existing PLAN sensors.

Techniques and algorithms for indoor navigation

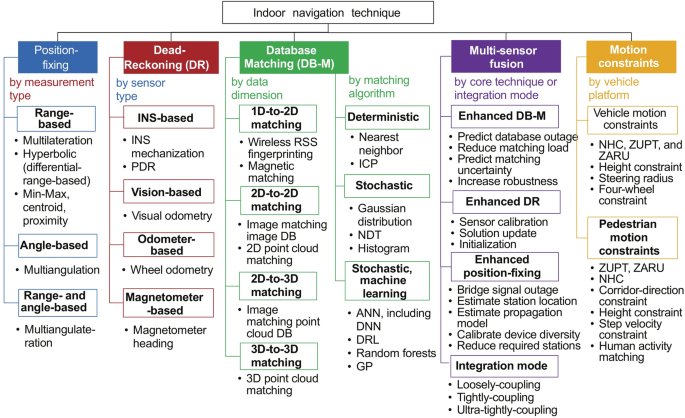

The PLAN techniques include position-fixing, Dead-Reckoning (DR), database matching, multi-sensor fusion, and motion constraints. Figure 4 demonstrates the indoor PLAN techniques. The details are provided in the following subsections.

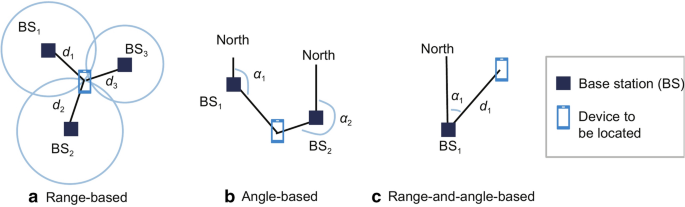

Position-fixing techniques

Geometrical position-fixing methods have been widely applied over the past few decades, especially in the field of satellite positioning and wireless sensor networks. The basic principle is the geometric calculation of distance and angle measurements. By the type of measurement, position-fixing methods include range-based (e.g., multilateration, min–max, centroid, proximity, and hyperbolic positioning), angle-based (e.g., multiangulation), and angle-and-range-based (e.g., multiangulateration). Figure 5 shows the basic principle of these methods.

Range-based methods

The location of a device can be estimated by measuring its distance to at least three base stations (or satellites) whose locations are known. The most typical method is multilateration (Guvenc and Chong 2009), which is geometrically the intersection of multiple spheres (for 3D positioning) or circles (for 2D positioning). Also, the method has several simplified versions. For example, the min–max method (Will et al. 2012) computes the intersection of multiple cubes or squares, while the centroid method (Pivato et al. 2011) calculates the weighted average of multiple base station locations. Moreover, the proximity method (Bshara et al. 2011) is a further simplification by using the location of the closest base station. Meanwhile, the differences of device-base-station ranges can be used to mitigate the influence of device diversity and some signal-propagation errors (Kaune et al. 2011).
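To make the range-based methods concrete, the following sketch implements 2D multilateration by linearized least squares, together with the weighted-centroid and proximity simplifications. It is a minimal illustration assuming error-free base station coordinates and at least three non-collinear stations; it is not the specific algorithm of the cited works, and the function names are hypothetical.

```python
import math


def multilaterate_2d(anchors, ranges):
    """Linearized least-squares 2D position from >= 3 anchors and ranges."""
    if len(anchors) < 3 or len(anchors) != len(ranges):
        raise ValueError("need at least three anchors with one range each")
    (x0, y0), r0 = anchors[0], ranges[0]
    # Subtracting the first circle equation from the others removes the
    # quadratic terms and leaves a linear system A [x, y]^T = b.
    n11 = n12 = n22 = u1 = u2 = 0.0
    for (xi, yi), ri in zip(anchors[1:], ranges[1:]):
        a1, a2 = 2.0 * (xi - x0), 2.0 * (yi - y0)
        b = r0**2 - ri**2 + xi**2 - x0**2 + yi**2 - y0**2
        # Accumulate the normal equations (A^T A) p = A^T b.
        n11 += a1 * a1
        n12 += a1 * a2
        n22 += a2 * a2
        u1 += a1 * b
        u2 += a2 * b
    det = n11 * n22 - n12 * n12
    if abs(det) < 1e-12:
        raise ValueError("anchors are collinear; geometry is degenerate")
    return ((n22 * u1 - n12 * u2) / det, (n11 * u2 - n12 * u1) / det)


def weighted_centroid(anchors, weights):
    """Centroid method: weighted average of the base station locations."""
    total = sum(weights)
    x = sum(w * ax for (ax, _), w in zip(anchors, weights)) / total
    y = sum(w * ay for (_, ay), w in zip(anchors, weights)) / total
    return (x, y)


def proximity(anchors, ranges):
    """Proximity method: location of the closest base station."""
    return min(zip(ranges, anchors))[1]


anchors = [(0.0, 0.0), (10.0, 0.0), (0.0, 10.0), (10.0, 10.0)]
truth = (3.0, 4.0)
ranges = [math.hypot(truth[0] - ax, truth[1] - ay) for ax, ay in anchors]
estimate = multilaterate_2d(anchors, ranges)
```

With noisy ranges, the linearized solution is often used only as an initial value for an iterative (e.g., Gauss-Newton) refinement.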

For position-fixing, the base station location is usually set manually or estimated using base-station localization approaches (Cheng et al. 2005). The distances between the device and the base stations are modeled with Path-Loss Models (PLMs), whose parameters are estimated (Li 2006). To achieve accurate ranging, it is important to mitigate the influence of error sources (e.g., ionospheric errors, tropospheric errors, wall effects, and human body effects). In addition, it is necessary to reduce the influence of end-device factors (e.g., device diversity).
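As a hedged illustration of RSS-based ranging, the sketch below uses the common log-distance form of a path-loss model, RSS(d) = RSS(d0) - 10 n log10(d / d0). The choice of this particular model, the reference distance of 1 m, and the function names are assumptions made for the example and are not taken from the cited works.

```python
import math


def rss_to_distance(rss_dbm, rss_at_d0_dbm, path_loss_exponent, d0_m=1.0):
    """Invert the log-distance model to obtain a range (m) from an RSS (dBm)."""
    return d0_m * 10.0 ** ((rss_at_d0_dbm - rss_dbm) / (10.0 * path_loss_exponent))


def fit_path_loss_model(distances_m, rss_dbm, d0_m=1.0):
    """Least-squares fit of (RSS at d0, path-loss exponent) from survey data."""
    xs = [math.log10(d / d0_m) for d in distances_m]
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(rss_dbm) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, rss_dbm))
    slope = sxy / sxx                      # equals -10 * n in the model
    intercept = mean_y - slope * mean_x    # equals RSS at d0
    return intercept, -slope / 10.0


# Synthetic, noise-free survey generated with RSS(1 m) = -40 dBm and n = 2.
survey_d = [1.0, 2.0, 5.0, 10.0, 20.0]
survey_rss = [-40.0 - 20.0 * math.log10(d) for d in survey_d]
rss0, exponent = fit_path_loss_model(survey_d, survey_rss)
```

In practice the fitted parameters differ between environments and devices, which is one reason the text stresses wall, human-body, and device-diversity effects.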

The research work (Petovello 2003) describes the range-based PLAN algorithm and its quality control. Meanwhile, the research work (Langley 1999) proposes an index (i.e., the dilution of precision) for the evaluation of signal geometry. A strong geometry is a necessary but not sufficient condition for accurate range-based localization because there are other error sources, such as the stochastic ones.
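The effect of signal geometry can be illustrated with a simplified dilution of precision (DOP) computation. The sketch assumes 2D range measurements with equal, uncorrelated errors and no receiver clock unknown (which a GNSS formulation would add); the function name is hypothetical.

```python
import math


def dop_2d(device, base_stations):
    """Horizontal DOP for 2D ranging: sqrt(trace((H^T H)^-1))."""
    hxx = hxy = hyy = 0.0
    for bx, by in base_stations:
        dx, dy = bx - device[0], by - device[1]
        dist = math.hypot(dx, dy)
        # Each row of H is the unit line-of-sight vector to one base station.
        ux, uy = dx / dist, dy / dist
        hxx += ux * ux
        hxy += ux * uy
        hyy += uy * uy
    det = hxx * hyy - hxy * hxy
    if det < 1e-12:
        raise ValueError("all base stations lie along one line of sight")
    # The trace of the inverse of a symmetric 2x2 matrix is (hxx + hyy) / det.
    return math.sqrt((hxx + hyy) / det)


good = dop_2d((0.0, 0.0), [(1.0, 0.0), (-1.0, 0.0), (0.0, 1.0), (0.0, -1.0)])
poor = dop_2d((0.0, 0.0), [(10.0, 0.0), (10.0, 1.0), (10.0, -1.0)])
```

Under these assumptions the position error standard deviation is approximately the DOP multiplied by the ranging error standard deviation, so base stations clustered in one direction (`poor`) amplify the same ranging noise far more than stations surrounding the device (`good`).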

Angle-based methods

Triangulation, a typical AoA based PLAN method, computes the device location by using the direction measurements to multiple base stations that have known locations (Bai et al. 2008). When direction measurement uncertainty is considered, the direction measurements from two base stations intersect in a quadrilateral rather than at a point. The research work (Wang and Ho 2015) provides a theoretical derivation and performance analysis of the triangulation method.
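A minimal 2D sketch of triangulation from two base stations is given below. It assumes that each base station reports the bearing from itself to the device, measured counter-clockwise from the x-axis, and that the bearings are error-free; the function name and angle convention are assumptions for illustration.

```python
import math


def triangulate_2d(station_a, bearing_a, station_b, bearing_b):
    """Intersect two bearing rays (radians from the x-axis) to locate a device."""
    ca, sa = math.cos(bearing_a), math.sin(bearing_a)
    cb, sb = math.cos(bearing_b), math.sin(bearing_b)
    # Determinant of the 2x2 system; equals sin(bearing_a - bearing_b).
    det = sa * cb - ca * sb
    if abs(det) < 1e-9:
        raise ValueError("bearings are parallel; no unique intersection")
    dx, dy = station_b[0] - station_a[0], station_b[1] - station_a[1]
    # Distance along ray A to the intersection point (Cramer's rule).
    t_a = (cb * dy - sb * dx) / det
    return (station_a[0] + t_a * ca, station_a[1] + t_a * sa)


device = triangulate_2d((0.0, 0.0), math.radians(45.0),
                        (10.0, 0.0), math.radians(135.0))
```

Perturbing each bearing by plus or minus its uncertainty and intersecting the resulting four rays gives the corners of the uncertainty quadrilateral mentioned above.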

Angle-based PLAN solutions can typically provide high accuracy (e.g., decimeter-level) in a small area (e.g., 30 m by 30 m) (Quuppa 2020). The challenge is that AoA systems require specific hardware (e.g., an array of antennae and a phase-detection mechanism) (Badawy et al. 2014), which is complex and costly. There are low-cost angle-based solutions, such as those that use RSS measurements from multiple directional antennae (Li et al. 2020b). However, for wide-area applications, both the angle measurement and PLAN accuracy are significantly degraded. Bluetooth 5.1 (Bluetooth 2019) has added direction measurement, which may change angle-based PLAN.

Angle-and-range-based methods

Multiangulateration, a typical angle-and-range-based PLAN method, calculates the device location by using its relative direction and distance to a base station that has a known position. This approach is widely used in engineering surveying. For indoor PLAN, a solution is to localize a device by its direction to a ceiling-installed AoA base station (Quuppa 2020) and known ceiling height. This approach is reliable, and it reduces the dependence on the number of base stations. However, the cost is high when used in wide-area applications.
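The ceiling-installed case can be sketched as follows. The example assumes that the base station measures an azimuth and an off-nadir angle (the angle from the vertical line pointing down), and that the device height above the floor is known or assumed; these conventions and the function names are illustrative and do not describe any vendor's interface.

```python
import math


def polar_fix_2d(station_xy, azimuth_rad, horizontal_range_m):
    """Position from one direction and one horizontal distance to a known station."""
    return (
        station_xy[0] + horizontal_range_m * math.cos(azimuth_rad),
        station_xy[1] + horizontal_range_m * math.sin(azimuth_rad),
    )


def ceiling_aoa_fix(station_xyz, azimuth_rad, off_nadir_rad, device_height_m):
    """Locate a device below a ceiling station from its measured direction."""
    # Vertical drop from the station to the device (assumed known).
    drop = station_xyz[2] - device_height_m
    # The off-nadir angle and the drop give the horizontal distance.
    horizontal_range = drop * math.tan(off_nadir_rad)
    return polar_fix_2d(station_xyz[:2], azimuth_rad, horizontal_range)


# Station on a 3 m ceiling, device carried at 1 m, seen 45 degrees off nadir.
fix = ceiling_aoa_fix((0.0, 0.0, 3.0), 0.0, math.radians(45.0), 1.0)
```

Because the horizontal distance grows with the tangent of the off-nadir angle, a fixed angular error produces larger position errors far from the station, which is consistent with the degraded wide-area performance noted for angle-based methods.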

In general, geometrical position-fixing methods are suitable for the environments (e.g., outdoors and open indoors) that can be well modeled and parameterized. By contrast, it is more challenging to use such methods in complex indoor and urban areas due to the existence of error sources such as multipath, NLoS conditions, and human-body effects. The survey paper (Li et al. 2020a) has a detailed description of the error sources for position-fixing methods. It is difficult to alleviate the device-, signal-propagation-, and base-station-related error sources by the position-fixing technique itself. Thus, it is common to integrate with other PLAN techniques, such as DR and database matching.

Dead-reckoning techniques

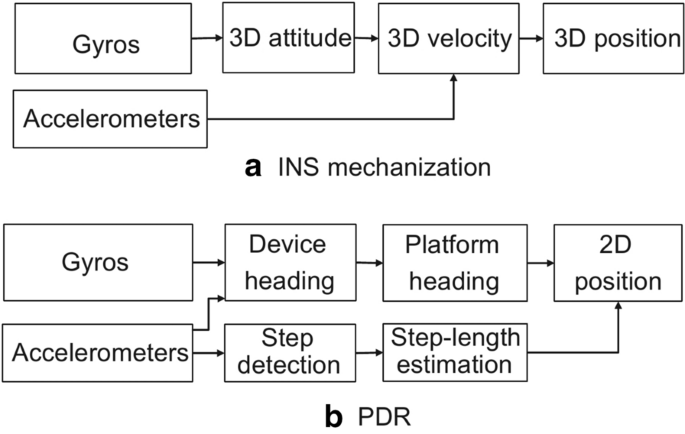

The basic principle of DR technology is to derive the current navigation state by using the previous navigation state and the angular and linear movements. The angular and linear movements can be obtained by using the measurements of sensors such as inertial sensors, cameras, magnetometers, and odometers. Among them, inertial sensors are most widely used for DR. There are two main DR algorithms based on inertial sensors: INS mechanization and PDR. The former is widely used in land-vehicle, airborne, and shipborne PLAN applications, while the latter is a common method for pedestrian navigation. Figure 6 shows the flow of the INS mechanization and PDR algorithms. INS can provide 3D navigation results, while PDR is a 2D navigation method.

The INS mechanization works on the integration of 3D angular rates and linear accelerations (Titterton et al. 2004). The gyro-measured angular rates are used to continuously track the 3D attitude between the sensor frame and the navigation frame. The obtained attitude is then utilized to transform the accelerometer-measured specific forces to the navigation frame. Afterward, the gravity vector is added to the specific force to obtain the acceleration of the device in the navigation frame. Finally, the acceleration is integrated once and twice to determine the 3D velocity and position, respectively. Because an accelerometer bias is integrated twice, and a gyro bias first accumulates into an attitude error that misprojects gravity before being integrated twice, the residual gyro and accelerometer biases in general cause position errors proportional to time cubed and time squared, respectively.
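The error-growth behavior can be illustrated with a deliberately simplified one-axis error model. This is a sketch, not a full INS mechanization: it assumes a small tilt angle, a constant bias, and simple Euler integration, and the function name and default step size are illustrative.

```python
G = 9.80665  # standard gravity in m/s^2

def position_error(duration, gyro_bias=0.0, accel_bias=0.0, dt=0.01):
    """Integrate a 1D error model and return the position error in metres.

    gyro_bias is in rad/s and accel_bias in m/s^2. Small-angle assumption:
    a tilt error of `tilt` radians projects G * tilt of gravity onto the
    horizontal axis, where it acts like an acceleration error.
    """
    tilt = velocity = position = 0.0
    for _ in range(round(duration / dt)):
        tilt += gyro_bias * dt               # gyro bias -> attitude error
        accel_error = accel_bias + G * tilt  # misprojected gravity + bias
        velocity += accel_error * dt         # first integration
        position += velocity * dt            # second integration
    return position
```

Under these assumptions, doubling the duration should multiply the accelerometer-bias error by about 4 and the gyro-bias error by about 8, matching the closed-form terms `0.5 * accel_bias * t**2` and `G * gyro_bias * t**3 / 6`.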

In contrast, the PDR algorithm (Li et al. 2017) determines the current 2D position by using the previous position and the latest heading and step length. Thus, it consists of platform-heading estimation, step detection, and step-length estimation. The platform heading is usually calculated by adding the device-platform misalignment (Pei et al. 2018) into the device heading, which can be tracked by an Attitude and Heading Reference System (AHRS) algorithm (Li et al. 2015). The steps are detected by finding periodical characteristics in accelerometer and gyro measurements (Alvarez et al. 2006), while the step length is commonly estimated by training a model that contains walking-related parameters (e.g., leg length and walking frequency) (Shin et al. 2007).
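The PDR position update described above can be sketched in a few lines. The function names, the east/north coordinate convention, and the heading convention (radians, clockwise from north) are assumptions made for illustration. Step detection and step-length estimation are taken as given inputs here.

```python
import math

def platform_heading(device_heading, misalignment):
    """Add the device-platform misalignment to the device heading (radians)."""
    return (device_heading + misalignment) % (2.0 * math.pi)

def pdr_update(position, heading, step_length):
    """Propagate a 2D position by one detected step.

    position:    (east, north) in metres.
    heading:     platform heading in radians, clockwise from north (assumed).
    step_length: estimated length of the step in metres.
    """
    east, north = position
    east += step_length * math.sin(heading)
    north += step_length * math.cos(heading)
    return east, north
```

For example, one 0.7 m step heading north followed by one 0.7 m step heading east moves the user from (0, 0) to approximately (0.7, 0.7). Any heading error rotates every subsequent step, which is why heading estimation dominates the PDR error budget in this simple model.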

There are DR algorithms based on other types of sensors, such as visual odometry (Scaramuzza and Fraundorfer 2011) and wheel odometry (Brunker et al. 2018). Magnetometers (Gebre-Egziabher et al. 2006) are also used for heading determination.

To achieve a robust long-term DR solution, there are several challenges, including the existence of sensor errors (Li et al. 2015), the existence of the misalignment angle between device and platform (Pei et al. 2018), and the requirement for position and heading initialization. Also, the continuity of data is very important for DR. In some applications, it is necessary to interpolate, smooth, or reconstruct the data (Kim et al. 2016).

DR has become a core technique for continuous and seamless indoor/outdoor PLAN due to its self-contained characteristics and robust short-term solutions. It is strong in either complementing other PLAN techniques when they are available or bridging their signal outages and performance-degradation periods.

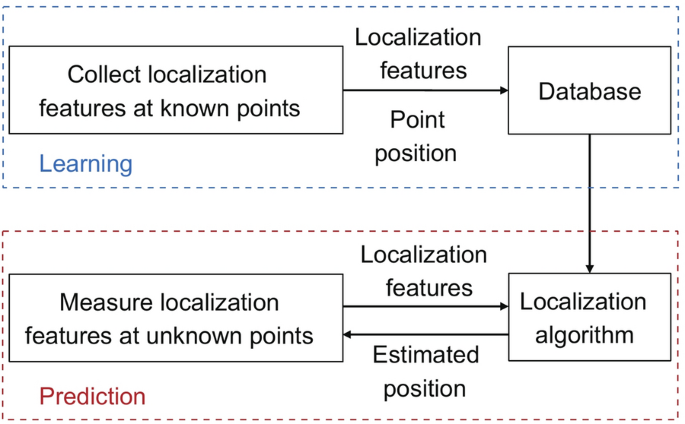

Database-matching techniques

The principle for database matching is to compute the difference between the measured fingerprints and the reference fingerprints in the database and find the closest match (Li et al. 2020a). Database-matching techniques are used to process data from various sensors, such as cameras, LiDAR, wireless sensors, and magnetometers. The database-matching process consists of the steps of feature extraction, database learning, and prediction. Figure 7 demonstrates the processes. First, valuable features are extracted from raw sensor signals. Afterward, features at multiple reference points are combined to generate a database. Finally, the real-time measured features are compared with those in the database to localize the device.

According to the dimensions of measurements and the database, database-matching algorithms can be divided into the 1D (measurement)-to-2D (database) matching, the 2D-to-2D matching, the 2D-to-3D matching, and the 3D-to-3D matching. In the 1D-to-2D matching, the real-time feature measurement can be expressed as a vector, while the database is a matrix. Such a matching approach has been used to match features such as wireless RSS (Li et al. 2017) and magnetic intensity (Li et al. 2018). Examples of the 2D-to-2D matching are the matching of real-time image features (e.g., road markers) and an image feature database (e.g., a road marker map) (Gruyer et al. 2016), and the matching of 2D LiDAR points and a grid map (de Paula Veronese et al. 2016). By contrast, the 2D-to-3D matching is a current hot spot. For example, it matches images to a 3D point cloud map (Wolcott and Eustice 2014). Finally, an example of the 3D-to-3D matching is the matching of 3D LiDAR measurements and a 3D point cloud map (Wolcott and Eustice 2017).

According to the prediction algorithm, database-matching algorithms can be divided into the deterministic (e.g., nearest neighbors (Lim et al. 2006) and Iterative Closest Point (ICP) (Chetverikov et al. 2002)) and stochastic (e.g., Gaussian distribution (Haeberlen et al. 2004), Normal Distribution Transform (NDT) (Biber and Straßer 2003), histogram (Rusu et al. 2008), and machine-learning-based) ones. Machine learning methods, such as Artificial Neural Network (ANN) (Li et al. 2019b), random forests (Guo et al. 2018), Deep Reinforcement Learning (DRL) (Li et al. 2019c), and Gaussian Process (GP) (Hähnel and Fox 2006), have also been applied.
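As a sketch of the deterministic case, the following function performs k-nearest-neighbor matching of a measured feature vector against a fingerprint database, which corresponds to the 1D-to-2D matching described earlier. The data layout (a list of position and reference-vector pairs), the Euclidean distance metric, and the function name are illustrative assumptions. `math.dist` requires Python 3.8 or later.

```python
import math

def match_fingerprint(measured, database, k=1):
    """Return the mean position of the k closest reference fingerprints.

    measured: feature vector, e.g. RSS values in dBm from several access points.
    database: list of ((x, y), reference_vector) pairs, one per reference point.
    """
    if not 1 <= k <= len(database):
        raise ValueError("k must be between 1 and the database size")
    # Rank reference points by Euclidean distance in feature space.
    ranked = sorted(database, key=lambda entry: math.dist(measured, entry[1]))
    nearest = ranked[:k]
    x = sum(pos[0] for pos, _ in nearest) / k
    y = sum(pos[1] for pos, _ in nearest) / k
    return x, y
```

The values in a real database would come from a survey or from crowdsourced data. Stochastic methods replace the distance ranking with a likelihood computed from a per-reference-point distribution.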

With the rapid development of machine-learning techniques and the diversity in modern PLAN applications, database matching has attracted even more attention than geometrical methods. Database-matching methods are suitable for scenarios that are difficult to model or parameterize. On the other hand, the inconsistency between real-time measurements and the database is the main error source in database matching. Such inconsistency may be caused by new environments, varying environments, and other factors. The survey paper (Li et al. 2020a) has a detailed description of the error sources for database matching.

Multi-sensor fusion

The diversity and redundancy of sensors are essential to ensure a high level of robustness and safety of the PLAN system. This is because various sensors have different functionalities. In addition to its primary functionality, each sensor has at least one secondary functionality to assist the PLAN of other sensors. Table 10 shows the primary and secondary functionalities of different sensors in terms of PLAN.

Due to their various functionalities, different sensors provide different human-like senses. Table 11 lists PLAN sensors corresponding to different senses of the human body. The same type of human-like sensors can provide a backup or augmentation to one another. Meanwhile, the different types of human-like sensors are complementary. Thus, by fusing data from a diversity of sensors, extra robustness and safety can be achieved.

To be specific, for position-fixing and database-matching methods, the loss of signals or features leads to outages in the PLAN solution. Also, changes in the model and database parameters may degrade the PLAN performance. To mitigate these issues, DR techniques can be used (El-Sheimy and Niu 2007a, b). Moreover, the use of other techniques can enhance position-fixing through more advanced base station position estimation (Cheng et al. 2005), propagation-model estimation (Seco and Jiménez 2017), and device diversity calibration (He et al. 2018). Also, the number of base stations required can be reduced (Li et al. 2020b). On the other hand, position-fixing and database-matching techniques can provide initialization and periodical updates for DR (Shin 2005), which in turn calibrate sensors and suppress the drift of DR results.

Database matching can also be enhanced by other techniques. For example, the position-fixing method can be used to reduce the searching space of database-matching (Zhang et al. 2017b), predict the database in unvisited areas (Li et al. 2019d), and predict the uncertainty of database-matching results (Li et al. 2019e). Also, a more robust PLAN solution may be achieved by integrating position-fixing and database-matching techniques (Kodippili and Dias 2010).

From the perspective of integration mode, there are three levels of integration. The first level is loose coupling (Shin 2005), which fuses PLAN solutions from different sensors. The second level is tight coupling (Gao et al. 2020), which fuses various sensor measurements to obtain a PLAN solution. The third level is ultra-tight coupling, which uses the data or results from some sensors to enhance the performance of other sensors.
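Loose coupling can be illustrated with a scalar Kalman filter in which DR supplies the displacement for the prediction step and an independent position solution (e.g., from wireless positioning) supplies the update. This is a minimal one-dimensional sketch under stated assumptions: the function names, the noise values, and the single position state are all illustrative, and practical systems use multi-dimensional states that also include sensor errors.

```python
def predict(position, variance, dr_displacement, process_noise):
    """Propagate the position with a dead-reckoning displacement."""
    return position + dr_displacement, variance + process_noise

def update(position, variance, position_fix, fix_variance):
    """Correct the DR position with an independent position solution."""
    gain = variance / (variance + fix_variance)   # Kalman gain
    position = position + gain * (position_fix - position)
    variance = (1.0 - gain) * variance
    return position, variance
```

For example, starting from position 0.0 with variance 1.0, a DR displacement of 1.0 with process noise 0.5 predicts position 1.0 with variance 1.5. A fix at 2.0 with variance 1.5 then gives a gain of 0.5, so the fused position is 1.5 with variance 0.75. During signal outages only `predict` runs, so the variance grows until the next fix arrives.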

Motion constraints

Motion constraints are used to enhance PLAN solutions from the perspective of algorithms, instead of adding extra sensors. Such constraints are especially useful for low-cost PLAN systems that cannot afford extra hardware. For land-based vehicles, the Non-Holonomic Constraints (NHC) can improve the heading and position accuracy significantly when the vehicle moves with enough speed (Niu et al. 2010), while the Zero velocity UPdaTe (ZUPT) and Zero Angular Rate Update (ZARU, also known as Zero Integrate Heading Rate (ZIHR)) respectively provide zero-velocity and zero-angular-rate constraints when the vehicle is quasi-static (Shin 2005). When the vehicle moves at low speed, a steering constraint can be applied (Niu et al. 2010). Moreover, there are other constraints such as the height constraint (Godha and Cannon 2007) and the four-wheel constraint (Brunker et al. 2018).

For pedestrian navigation, ZUPT (Foxlin 2005) and ZARU (Li et al. 2015) are most commonly used. Also, the NHC and step velocity constraint (Zhuang et al. 2015) have been applied. Furthermore, in indoor environments, constraints such as the corridor-direction constraint (Abdulrahim et al. 2010), the height constraint (Abdulrahim et al. 2012), and the human-activity constraint (Zhou et al. 2015) are useful to enhance the PLAN solution.
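A ZUPT can be sketched as two steps: detect a quasi-static period from the inertial data, then apply a zero-velocity pseudo-measurement. The thresholds, the function names, and the per-axis scalar update below are hypothetical simplifications chosen for illustration. Published detectors and filters are typically more elaborate.

```python
import math

G = 9.80665  # standard gravity in m/s^2

def is_quasi_static(accel_samples, gyro_samples, accel_tol=0.3, gyro_tol=0.05):
    """Return True if a window of IMU samples looks stationary.

    accel_samples: list of (ax, ay, az) specific forces in m/s^2.
    gyro_samples:  list of (wx, wy, wz) angular rates in rad/s.
    The tolerances are illustrative values, not tuned thresholds.
    """
    def norm(v):
        return math.sqrt(sum(c * c for c in v))
    accel_ok = all(abs(norm(a) - G) < accel_tol for a in accel_samples)
    gyro_ok = all(norm(w) < gyro_tol for w in gyro_samples)
    return accel_ok and gyro_ok

def zupt_update(velocity, variance, meas_variance=1e-4):
    """Apply a zero-velocity pseudo-measurement to each velocity axis."""
    gain = variance / (variance + meas_variance)
    corrected = tuple(v + gain * (0.0 - v) for v in velocity)
    return corrected, (1.0 - gain) * variance
```

A ZARU follows the same pattern with the angular rate in place of the velocity. In a full filter the update would also correct the correlated position, attitude, and sensor-bias states.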

Use cases

Multi-sensor-based indoor navigation has been utilized in various applications, such as pedestrian, vehicle, robot, animal, and sports tracking. This section demonstrates three of our previous use cases on indoor navigation. The platforms used include smartphones, drones, and robots.

Smartphones

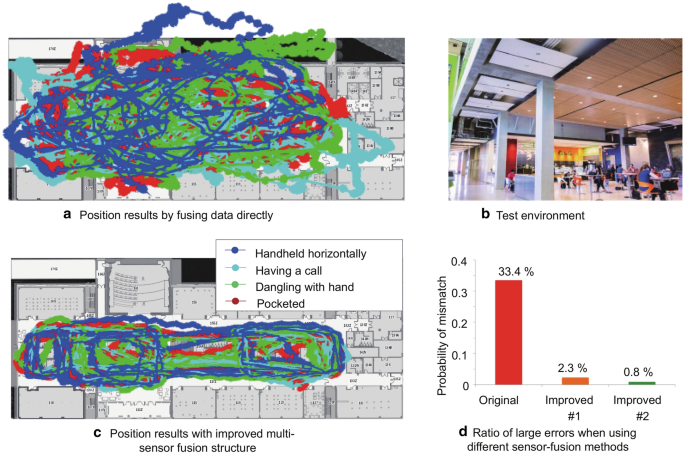

This case uses an enhanced information-fusion structure to improve smartphone navigation (Li et al. 2017). The experiment uses the built-in inertial sensors, WiFi, and magnetometers of smartphones. By combining the advantages of PDR, WiFi database matching, and magnetic matching, a multi-level quality-control mechanism is introduced. Some quality controls are presented based on the interaction of sensors. For example, wireless positioning results are used to limit the search scope for magnetic matching, to reduce both computational load and mismatch rate.

The user carried a mobile phone and navigated in a modern office building (120 m by 60 m) for nearly an hour. The smartphone experienced multiple motion modes, including being held horizontally, dangling in the hand, being held to the ear during a call, and being carried in a trouser pocket.

The position results are demonstrated in Fig. 8. When the data from PDR, WiFi, and magnetic matching were fused directly in a Kalman filter, the results suffered from large position errors. The ratio of large position errors (greater than 15 m) reached 33.4%. Such a solution is not reliable enough for user navigation. By using the improved multi-source fusion, the ratio of large errors was reduced to 0.8%. This use case indicates the importance of sensor interaction and robust multi-sensor fusion.

Fig. 8 Inertial/WiFi/magnetic integrated smartphone navigation results (modified from the results reported in Li et al. (2017))

Drones

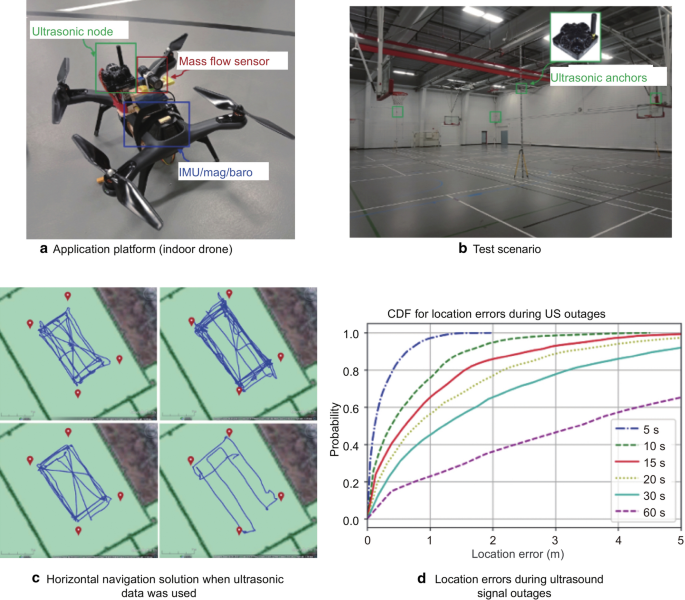

This use case integrated a low-cost IMU, a barometer, a mass-flow sensor, and ultrasonic sensors for indoor drone navigation (Li et al. 2019a). The forward velocity from the mass flow sensor and the lateral and vertical NHC can be utilized for 3D velocity updates.

Figure 9 shows the test scenario and selected results. Indoor flight tests were conducted in a 20 m by 20 m area with a quadrotor drone, which was equipped with an InvenSense MPU6000 IMU, a Honeywell HMC 5983 magnetometer triad, a TE MS5611 barometer, a Sensirion SFM3000 mass-flow sensor, and a Marvelmind ultrasonic beacon. Additionally, four ultrasonic beacons were installed on four static leveling pillars, with a height of 4 m.

Fig. 9 INS/Barometer/Mass-flow/Ultrasonic integrated navigation (modified from the results reported in Li et al. (2019a))

When ultrasonic ranges were used, the system achieved a continuous and smooth navigation solution with centimeter-to-decimeter-level accuracy. However, during ultrasonic signal outages, the mean position error grew to 0.2, 0.6, 1.0, 1.3, 1.8, and 4.3 m after navigating for 5, 10, 15, 20, 30, and 60 s, respectively.

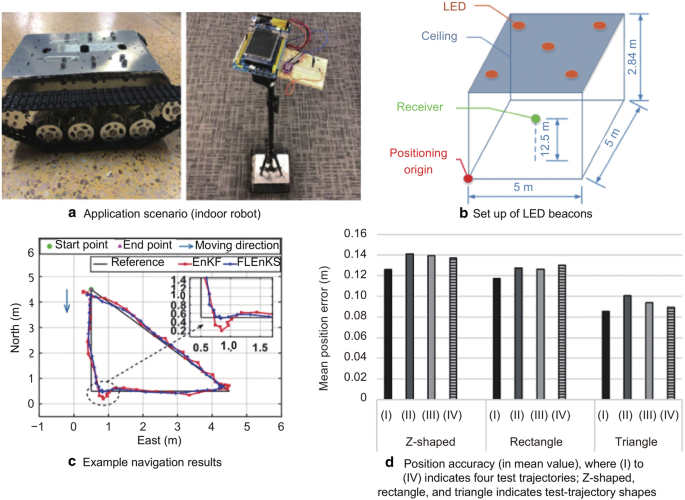

Robots

This use case integrated a photodiode and a camera for indoor robot navigation (Zhuang et al. 2019). Figure 10 shows the test platform and selected results. The size of the test area was 5 m by 5 m by 2.84 m, with five CREE T6 Light-Emitting-Diodes (LEDs) mounted evenly on the ceiling as light beacons. The receiver used in the experiments contained an OPT101 photodiode and a front camera of a smartphone. The receiver was mounted on a mobile robot at a height of 1.25 m.

Fig. 10 Photodiode/Camera integrated navigation (modified from the results reported in Zhuang et al. (2019))

Field test results showed that the proposed system provided a semi-real-time positioning solution with an average 3D positioning accuracy of 15.6 cm in dynamic tests. The accuracy is expected to be further improved when more sensors are used.

Future trends

This section summarizes the future trends for indoor PLAN, including the improvement of sensors, the use of multi-platform, multi-device, and multi-sensor information fusion, the development of self-learning algorithms and systems, the integration with 5G/IoT/edge computing, and the use of HD maps for indoor PLAN.

Improvement of sensors

Table 12 illustrates the future trends of sensors in terms of PLAN. Sensors such as LiDAR, RADAR, inertial sensors, GNSS, and UWB are being developed toward lower cost and smaller size to facilitate their commercialization. For HD maps, reducing maintenance costs and increasing update frequency are key. Cameras may further improve their physical performance through features such as self-cleaning, larger dynamic range, stronger low-light sensitivity, and stronger near-infrared sensitivity.

It is expected that the introduction of new wireless infrastructure features (e.g., 5G, LPWAN, WiFi HALow, WiFi RTT, Bluetooth long range, and Bluetooth direction finding) and new sensors (e.g., UWB, LiDAR, depth camera, and high-precision GNSS) in consumer devices will bring new directions and opportunities for the PLAN community.

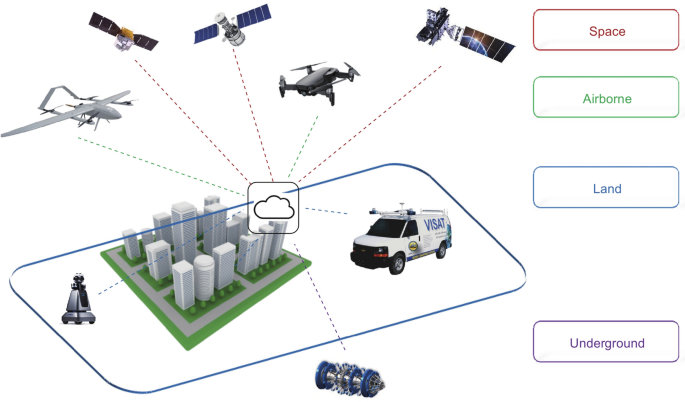

Multi-platform, multi-device, and multi-sensor information fusion

The PLAN system will develop towards the integration of multiple platforms, multiple devices, and multiple sensors. Figure 11 shows a schematic diagram of the multiple-platform integrated PLAN.

With the development of low-cost miniaturized satellites and Low Earth Orbit (LEO) satellite technologies, using LEO satellites to provide space-based navigation signals has become feasible. The research paper (Cluzel et al. 2018) uses LEO satellites to enhance the coverage of IoT signals. Also, the paper (Wang et al. 2018) analyzes the navigation signals from LEO satellites. In addition to the space-borne platform, there are airborne and underground PLAN platforms. For example, the research paper (Sallouha et al. 2018) uses unmanned aerial vehicles as base stations to enhance PLAN.

Collaborative PLAN is also a future direction. The research in (Zhang et al. 2017a) reviews 5G cooperative localization techniques and points out that cooperative localization can be an important feature of 5G networks. In the coming years, massive numbers of devices, dense base stations, and device-to-device communication may make accurate cooperative localization possible. In addition to devices carried by different users, there may be multiple devices (e.g., smartphones, smartwatches, and IoT devices) on the same human body or vehicle. The information from such devices can also be used to enhance PLAN.

Self-learning algorithms and systems

Artificial intelligence

With the popularization of IoT and location-based services, more complex and new PLAN scenarios will appear. To handle such scenarios, self-learning PLAN algorithms and systems are needed. There are already research works that use artificial intelligence techniques in various PLAN modules, such as initialization, the switch of sensor integration mode, and the tuning of parameters. The research paper (Chen et al. 2020) uses ANN to generate a PLAN solution directly from inertial sensor data, while the research work (Li et al. 2019c) uses DRL to perform wireless positioning from another perspective. In the future, there will be a massive amount of data, which meets the requirement of artificial intelligence. Meanwhile, with the further development of artificial intelligence algorithms, computing power, and communication capabilities, the integration between PLAN and artificial intelligence will become tighter.

Data crowdsourcing (e.g., co-location)

The data from numerous consumer electronics and sensor networks will make crowdsourcing (e.g., co-location) a reality. As mentioned in the HD map subsection, the crowdsourcing technique may fundamentally change the mode of map and HD map generation. Furthermore, using crowdsourced data can enhance PLAN performance. For example, crowdsourced data contains more comprehensive information than the data from a single ego vehicle in terms of map availability and sensing range. On the other hand, as pointed out in (Li et al. 2019e), how to select the most valuable data from the crowdsourced big data to update the database is still a challenge. It is difficult for software to evaluate the reliability of data automatically without manual intervention or an evaluation reference.

Integration with 5G, IoT, and edge/fog computing

As described in the 5G subsection, the development of 5G and IoT technologies is changing PLAN. The new features (e.g., dense miniaturized base stations, mm-wave MIMO, and device-to-device communication) can directly enhance PLAN. Also, the combination of 5G/IoT and edge/fog computing will bring new PLAN opportunities. Edge/fog computing processes data as close to the source as possible, which speeds up PLAN data processing, reduces latency, and can improve overall outcomes. The review papers (Oteafy and Hassanein 2018) and (Shi et al. 2016) provide detailed overviews of edge and fog computing. Such techniques may be able to change the existing operation mode on HD maps and for PLAN. It may become possible to repair or optimize HD maps online by using SLAM and artificial intelligence technologies.

HD maps for indoor navigation

HD maps will be extended from outdoors to indoors. The cooperation among the manufacturers of cars, maps, 5G, and consumer devices has already shown its importance (Abuelsamid 2017). The high accuracy and rich information of the HD map make it a valuable indoor PLAN sensor and even a platform that links people, vehicles, and the environment. Indoor and outdoor PLAN may need different HD map elements. Therefore, different HD maps may be developed according to different scenarios. Similar to outdoors, the standardization of indoor HD maps will be important but challenging.

Conclusion

This article first reviews the market value, including the social benefits and economic values, of indoor navigation, followed by the classification from the market perspective and the main players. Then, it compares the state-of-the-art sensors, including navigation sensors and environmental-perception sensors (as aiding sensors for navigation), and techniques, including position-fixing, dead-reckoning, database matching, multi-sensor fusion, and motion constraints. Finally, it points out several future trends, including the improvement of sensors, the use of multi-platform, multi-device, and multi-sensor information fusion, the development of self-learning algorithms and systems, the integration with 5G/IoT/edge computing, and the use of HD maps for indoor PLAN.

Availability of data and materials

Data sharing is not applicable to this article as no datasets were generated or analyzed in this review article.

References

Abdulrahim, K., Hide, C., Moore, T., & Hill, C. (2010). Aiding MEMS IMU with building heading for indoor pedestrian navigation. In 2010 ubiquitous positioning indoor navigation and location based service. Helsinki: IEEE.

Abdulrahim, K., Hide, C., Moore, T., & Hill, C. (2012). Using constraints for shoe mounted indoor pedestrian navigation. Journal of Navigation, 65(1), 15–28.

Abuelsamid, S. (2017). BMW, HERE and mobileye team up to crowd-source HD maps for self-driving. https://www.forbes.com/sites/samabuelsamid/2017/02/21/bmw-here-and-mobileye-team-up-to-crowd-source-hd-maps-for-self-driving/#6f04e0577cb3. Accessed April 28, 2020.

Agency, E. G. (2019). Report on road user needs and requirements. https://www.gsc-europa.eu/sites/default/files/sites/all/files/Report_on_User_Needs_and_Requirements_Road.pdf. Accessed April 28, 2020.

Alvarez, D., González, R. C., López, A., & Alvarez, J. C. (2006). Comparison of step length estimators from weareable accelerometer devices. Annual international conference of the IEEE engineering in medicine and biology (pp. 5964–5967). IEEE: New York.

Andrews, J. G., Buzzi, S., Choi, W., Hanly, S. V., Lozano, A., Soong, A. C. K., & Zhang, J. C. (2014). What will 5G be? IEEE Journal on Selected Areas in Communications, 32(6), 1065–1082.

Badawy, A., Khattab, T., Trinchero, D., Fouly, T. E., & Mohamed, A. (2014). A simple AoA estimation scheme. arXiv:1409.5744.

Bai, L., Peng, C. Y., & Biswas, S. (2008). Association of DOA estimation from two ULAs. IEEE Transactions on Instrumentation and Measurement, 57(6), 1094–1101. https://doi.org/10.1109/TIM.2007.915122.

Basnayake, C., Williams, T., Alves, P., & Lachapelle, G. (2010). Can GNSS Drive V2X? GPS World, 21(10), 35–43.

Biber, P., & Straßer, W. (2003). The normal distributions transform: A new approach to laser scan matching. Proceedings 2003 IEEE/RSJ international conference on intelligent robots and systems (IROS) (pp. 2743–2748). IEEE: Las Vegas, NV.

Bluetooth. (2017). Exploring Bluetooth 5—going the distance. https://www.bluetooth.com/blog/exploring-bluetooth-5-going-the-distance/. Accessed April 28, 2020.

Bluetooth. (2019). Bluetooth 5.1 Direction finding. https://www.bluetooth.com/wp-content/uploads/2019/05/BTAsia/1145-NORDIC-Bluetooth-Asia-2019Bluetooth-5.1-Direction-Finding-Theory-and-Practice-v0.pdf. Accessed April 28, 2020.

Brossard, M., Barrau, A., & Bonnabel, S. (2020). AI-IMU dead-reckoning. IEEE Transactions on Intelligent Vehicles, 5(4), 585–595. https://doi.org/10.1109/TIV.2020.2980758.

Brunker, A., Wohlgemuth, T., Frey, M., & Gauterin, F. (2018). Odometry 2.0: A slip-adaptive EIF-based four-wheel-odometry model for parking. IEEE Transactions on Intelligent Vehicles, 4(1), 114–126.

Bshara, M., Orguner, U., Gustafsson, F., & Van Biesen, L. (2011). Robust tracking in cellular networks using HMM filters and cell-ID measurements. IEEE Transactions on Vehicular Technology, 60(3), 1016–1024.

Cadena, C., Carlone, L., Carrillo, H., Latif, Y., Scaramuzza, D., Neira, J., et al. (2016). Past, present, and future of simultaneous localization and mapping: Toward the robust-perception age. IEEE Transactions on Robotics, 32(6), 1309–1332.

Chen, C., Zhao, P., Lu, C. X., Wang, W., Markham, A., & Trigoni, A. (2020). Deep-learning-based pedestrian inertial navigation: Methods, data set, and on-device inference. IEEE Internet of Things Journal, 7(5), 4431–4441.

Cheng, Y. C., Chawathe, Y., Lamarca, A., & Krumm, J. (2005). Accuracy characterization for metropolitan-scale Wi-Fi localization. In Proceedings of the 3rd international conference on mobile systems, applications, and services, MobiSys 2005 (pp. 233–245). Seattle, WA: IEEE.

Chetverikov, D., Svirko, D., Stepanov, D., & Krsek, P. (2002). The trimmed iterative closest point algorithm. Object recognition supported by user interaction for service robots (pp. 545–548). IEEE: Quebec City, QC.

Ciurana, M., Barcelo-Arroyo, F., & Izquierdo, F. (2007). A ranging system with IEEE 802.11 data frames. In 2007 IEEE radio and wireless symposium (pp. 133–136). Long Beach, CA: IEEE.

Cluzel, S., Franck, L., Radzik, J., Cazalens, S., Dervin, M., Baudoin, C., & Dragomirescu, D. (2018). 3GPP NB-IOT coverage extension using LEO satellites. IEEE Vehicular Technology Conference (pp. 1–5). IEEE: Porto.

de Paula Veronese, L., Guivant, J., Cheein, F. A. A., Oliveira-Santos, T., Mutz, F., de Aguiar, E., et al. (2016). A light-weight yet accurate localization system for autonomous cars in large-scale and complex environments. 2016 IEEE 19th international conference on intelligent transportation systems (ITSC) (pp. 520–525). IEEE: Rio de Janeiro.

Decawave. (2020). DWM1000 Module. https://www.decawave.com/product/dwm1000-module/. Accessed April 28, 2020.

del Peral-Rosado, J. A., Raulefs, R., López-Salcedo, J. A., & Seco-Granados, G. (2017). Survey of cellular mobile radio localization methods: From 1G to 5G. IEEE Communications Surveys and Tutorials, 20(2), 1124–1148.

Dodge, D. (2013). Indoor Location startups innovating Indoor Positioning. https://dondodge.typepad.com/the_next_big_thing/2013/06/indoor-location-startups-innovating-indoor-positioning.html. Accessed April 28, 2020.

El-Sheimy, N., & Niu, X. (2007a). The promise of MEMS to the navigation community. Inside GNSS, 2(2), 46–56.

El-Sheimy, N., & Niu, X. (2007b). The promise of MEMS to the navigation community. Inside GNSS, 2(2), 26–56.

El-Sheimy, N., Hou, H., & Niu, X. (2007). Analysis and modeling of inertial sensors using Allan variance. IEEE Transactions on Instrumentation and Measurement, 57(1), 140–149.

El-Sheimy, N., & Youssef, A. (2020). Inertial sensors technologies for navigation applications: State of the art and future trends. Satellite Navigation, 1(1), 2.

FCC. (2015). FCC 15–9. https://ecfsapi.fcc.gov/file/60001025925.pdf. Accessed April 28, 2020.

Foxlin, E. (2005). Pedestrian tracking with shoe-mounted inertial sensors. IEEE Computer Graphics and Applications, 25(6), 38–46.

Gao, Z., Ge, M., Li, Y., Pan, Y., Chen, Q., & Zhang, H. (2020). Modeling of multi-sensor tightly aided BDS triple-frequency precise point positioning and initial assessments. Information Fusion, 55, 184–198.

Gebre-Egziabher, D., Elkaim, G. H., David Powell, J., & Parkinson, B. W. (2006). Calibration of strapdown magnetometers in magnetic field domain. Journal of Aerospace Engineering, 19(2), 87–102.

Glennie, C., & Lichti, D. D. (2010). Static calibration and analysis of the Velodyne HDL-64E S2 for high accuracy mobile scanning. Remote Sensing, 2(6), 1610–1624.

Godha, S., & Cannon, M. E. (2007). GPS/MEMS INS integrated system for navigation in urban areas. GPS Solutions, 11(3), 193–203.

Goldstein. (2019). Global Indoor Positioning and Indoor Navigation (IPIN) Market Outlook, 2024. https://www.goldsteinresearch.com/report/global-indoor-positioning-and-indoor-navigation-ipin-market-outlook-2024-global-opportunity-and-demand-analysis-market-forecast-2016-2024. Accessed April 28, 2020.

Gruyer, D., Belaroussi, R., & Revilloud, M. (2016). Accurate lateral positioning from map data and road marking detection. Expert Systems with Applications, 43, 1–8.

Guo, X., Ansari, N., Li, L., & Li, H. (2018). Indoor localization by fusing a group of fingerprints based on random forests. IEEE Internet of Things Journal, 5(6), 4686–4698.

Guvenc, I., & Chong, C. C. (2009). A survey on TOA based wireless localization and NLOS mitigation techniques. IEEE Communications Surveys and Tutorials, 11(3), 107–124.

Haeberlen, A., Flannery, E., Ladd, A. M., Rudys, A., Wallach, D. S., & Kavraki, L.E. (2004). Practical robust localization over large-scale 802.11 wireless networks. In Proceedings of the 10th annual international conference on Mobile computing and networking (pp. 70–84). Philadelphia, PA: IEEE.

Hähnel, B. F. D., & Fox, D. (2006). Gaussian processes for signal strength-based location estimation. In Proceeding of robotics: Science and systems. Philadelphia, PA: IEEE.

Halperin, D., Hu, W., Sheth, A., & Wetherall, D. (2011). Tool release: Gathering 802.11 n traces with channel state information. ACM SIGCOMM Computer Communication Review, 41(1), 53–53.

He, S., Chan, S. H. G., Yu, L., & Liu, N. (2018). SLAC: Calibration-free pedometer-fingerprint fusion for indoor localization. IEEE Transactions on Mobile Computing, 17(5), 1176–1189.

Ibisch, A., Stümper, S., Altinger, H., Neuhausen, M., Tschentscher, M., Schlipsing, M., Salinen, J., & Knoll, A. (2013). Towards autonomous driving in a parking garage: Vehicle localization and tracking using environment-embedded lidar sensors. In 2013 IEEE intelligent vehicles symposium (IV) (pp. 829–834). Gold Coast: IEEE.

Ibisch, A., Houben, S., Michael, M., Kesten, R., & Schuller, F. (2015). Arbitrary object localization and tracking via multiple-camera surveillance system embedded in a parking garage. In Video surveillance and transportation imaging applications 2015 (pp. 94070G). San Francisco, CA: International Society for Optics and Photonics.

IEEE. (2020). IEEE 802.11TM Wireless Local Area Network. http://www.ieee802.org/11/. Accessed April 28, 2020.

Kaune, R., Hörst, J., & Koch, W. (2011). Accuracy analysis for TDOA localization in sensor networks. 14th international conference on information fusion (pp. 1–8). IEEE: Chicago, Illinois, USA.

Kim, K. J., Agrawal, V., Gaunaurd, I., Gailey, R. S., & Bennett, C. L. (2016). Missing sample recovery for wireless inertial sensor-based human movement acquisition. IEEE Transactions on Neural Systems and Rehabilitation Engineering, 24(11), 1191–1198. https://doi.org/10.1109/TNSRE.2016.2532121.

Kodippili, N. S., & Dias, D. (2010). Integration of fingerprinting and trilateration techniques for improved indoor localization. In 2010 7th international conference on wireless and optical communications networks. Colombo: IEEE.

Kok, M., & Solin, A. (2018). Scalable magnetic field SLAM in 3D using Gaussian process maps. 2018 21st international conference on information fusion (FUSION) (pp. 1353–1360). IEEE: Cambridge.

Langley, R. B. (1999). Dilution of precision. GPS World, 1(1), 1–5.

Leugner, S., Pelka, M., & Hellbrück, H. (2016). Comparison of wired and wireless synchronization with clock drift compensation suited for U-TDoA localization. 2016 13th workshop on positioning, navigation and communications (WPNC) (pp. 1–4). IEEE: Bremen.

Levinson, J., Montemerlo, M., & Thrun, S. (2007). Map-based precision vehicle localization in urban environments. In Robotics: Science and systems (pp. 1). Atlanta, GA: IEEE.

Levinson, J., & Thrun, S. (2010). Robust vehicle localization in urban environments using probabilistic maps. 2010 IEEE international conference on robotics and automation (pp. 4372–4378). IEEE: Anchorage, AK.

Li, X. (2006). RSS-based location estimation with unknown pathloss model. IEEE Transactions on Wireless Communications, 5(12), 3626–3633. https://doi.org/10.1109/TWC.2006.256985.

Li, Y., Georgy, J., Niu, X., Li, Q., & El-Sheimy, N. (2015). Autonomous calibration of MEMS gyros in consumer portable devices. IEEE Sensors Journal, 15(7), 4062–4072.

Li, Y., Zhuang, Y., Zhang, P., Lan, H., Niu, X., & El-Sheimy, N. (2017). An improved inertial/WiFi/magnetic fusion structure for indoor navigation. Information Fusion, 34, 101–119.

Li, Y., Gao, Z., He, Z., Zhang, P., Chen, R., & El-Sheimy, N. (2018). Multi-sensor multi-floor 3D localization with robust floor detection. IEEE Access, 6, 76689–76699.

Li, Y., Zahran, S., Zhuang, Y., Gao, Z. Z., Luo, Y. R., He, Z., et al. (2019a). IMU/magnetometer/barometer/mass-flow sensor integrated indoor quadrotor UAV localization with robust velocity updates. Remote Sensing, 11(7), 838. https://doi.org/10.3390/rs11070838.

Li, Y., Gao, Z. Z., He, Z., Zhuang, Y., Radi, A., Chen, R. Z., & El-Sheimy, N. (2019b). Wireless fingerprinting uncertainty prediction based on machine learning. Sensors, 19(2), 324.

Li, Y., Hu, X., Zhuang, Y., Gao, Z., Zhang, P., & El-Sheimy, N. (2019c). Deep Reinforcement Learning (DRL): another perspective for unsupervised wireless localization. IEEE Internet of Things Journal.

Li, Y., He, Z., Zhuang, Y., Gao, Z. Z., Tsai, G. J., & Pei, L. (2019d). Robust localization through integration of crowdsourcing and machine learning. Presented at the International conference on mobile mapping technology. Shenzhen, China.

Li, Y., He, Z., Gao, Z., Zhuang, Y., Shi, C., & El-Sheimy, N. (2019). Toward robust crowdsourcing-based localization: A fingerprinting accuracy indicator enhanced wireless/magnetic/inertial integration approach. IEEE Internet of Things Journal, 6(2), 3585–3600.

Li, Y., Zhuang, Y., Hu, X., Gao, Z. Z., Hu, J., Chen, L., He, Z., Pei, L., Chen, K. J., Wang, M. S., Niu, X. J., Chen, R. Z., Thompson, J., Ghannouchi, F., & El-Sheimy, N. (2020a). Location-Enabled IoT (LE-IoT): A survey of positioning techniques, error sources, and mitigation. IEEE Internet of Things Journal.

Li, Y., Yan, K. L., He, Z., Li, Y. Q., Gao, Z. Z., Pei, L., et al. (2020). Cost-effective localization using RSS from single wireless access point. IEEE Transactions on Instrumentation and Measurement, 69(5), 1860–1870. https://doi.org/10.1109/TIM.2019.2922752.

Lim, H., Kung, L. C., Hou, J. C., & Luo, H. (2006). Zero-configuration, robust indoor localization: Theory and experimentation. In Proceedings IEEE INFOCOM 2006. 25TH IEEE international conference on computer communications. Barcelona: IEEE.

Lin, Y., Gao, F., Qin, T., Gao, W. L., Liu, T. B., Wu, W., et al. (2018). Autonomous aerial navigation using monocular visual-inertial fusion. Journal of Field Robotics, 35(1), 23–51.

Liu, R., Wang, J., & Zhang, B. (2020). High definition map for automated driving: Overview and analysis. The Journal of Navigation, 73(2), 324–341.

MachineDesign. (2020). 5G’s Important Role in Autonomous Car Technology. https://www.machinedesign.com/mechanical-motion-systems/article/21837614/5gs-important-role-in-autonomous-car-technology. Accessed April 28, 2020.

Marvelmind. (2020). Indoor Navigation System Operating manual. https://marvelmind.com/pics/marvelmind_navigation_system_manual.pdf. Accessed April 28, 2020.

Maybeck, P. S. (1982). Stochastic models, estimation, and control. London: Academic Press.

McManus, C., Churchill, W., Napier, A., Davis, B., & Newman, P. (2013). Distraction suppression for vision-based pose estimation at city scales. 2013 IEEE international conference on robotics and automation (pp. 3762–3769). IEEE: Karlsruhe.

Mur-Artal, R., & Tardós, J. D. (2017). ORB-SLAM2: An open-source SLAM system for monocular, stereo, and RGB-D cameras. IEEE Transactions on Robotics, 33(5), 1255–1262.

NHTSA. (2017). Federal motor vehicle safety standards; V2V communications. Federal Register, 82(8), 3854–4019.

Niu, X., Zhang, H., Chiang, K. W., & El-Sheimy, N. (2010). Using land-vehicle steering constraint to improve the heading estimation of MEMS GPS/INS georeferencing systems. ISPRS - International Archives of the Photogrammetry, Remote Sensing and Spatial Information Sciences, 38(1), 1–5.

Niu, X., Li, Y., Kuang, J., & Zhang, P. (2019). Data fusion of dual foot-mounted IMU for pedestrian navigation. IEEE Sensors Journal, 19(12), 4577–4584.

NovAtel. (2020). IMU-FSAS. https://docs.novatel.com/OEM7/Content/Technical_Specs_IMU/FSAS_Overview.htm. Accessed April 28, 2020.

Nvidia. (2020). DRIVE Labs: How Localization Helps Vehicles Find Their Way. https://news.developer.nvidia.com/drive-labs-how-localization-helps-vehicles-find-their-way/. Accessed April 28, 2020.

Oteafy, S. M. A., & Hassanein, H. S. (2018). IoT in the fog: A roadmap for data-centric IoT development. IEEE Communications Magazine, 56(3), 157–163.

Pei, L., Liu, D., Zou, D., Leefookchoy, R., Chen, Y., & He, Z. (2018). Optimal heading estimation based multidimensional particle filter for pedestrian indoor positioning. IEEE Access, 6, 49705–49720. https://doi.org/10.1109/ACCESS.2018.2868792.

Petovello, M. (2003). Real-time integration of a tactical-grade IMU and GPS for high-accuracy positioning and navigation. Calgary: University of Calgary.

Pivato, P., Palopoli, L., & Petri, D. (2011). Accuracy of RSS-based centroid localization algorithms in an indoor environment. IEEE Transactions on Instrumentation and Measurement, 60(10), 3451–3460.

Poggenhans, F., Salscheider, N. O., & Stiller, C. (2018). Precise localization in high-definition road maps for urban regions. 2018 IEEE/RSJ international conference on intelligent robots and systems (IROS) (pp. 2167–2174). IEEE: Madrid.

Quuppa. (2020). Product and Technology. http://quuppa.com/technology/. Accessed April 28, 2020.

Radi, A., Bakalli, G., Guerrier, S., El-Sheimy, N., Sesay, A. B., & Molinari, R. (2019). A multisignal wavelet variance-based framework for inertial sensor stochastic error modeling. IEEE Transactions on Instrumentation and Measurement, 68(12), 4924–4936.

Rantakokko, J., Händel, P., Fredholm, M., & Marsten-Eklöf, F. (2010). User requirements for localization and tracking technology: A survey of mission-specific needs and constraints. 2010 international conference on indoor positioning and indoor navigation (pp. 1–9). IEEE: Zurich.

Reid, T. G. R., Houts, S. E., Cammarata, R., Mills, G., Agarwal, S., Vora, A., & Pandey, G. (2019). Localization requirements for autonomous vehicles. arXiv:1906.01061.

Restrepo, J. (2020). World radio 5G roadmap: challenges and opportunities ahead. https://www.itu.int/en/ITU-R/seminars/rrs/RRS-17-Americas/Documents/Forum/1_ITU%20Joaquin%20Restrepo.pdf. Accessed April 28, 2020.

Rusu, R. B., Blodow, N., Marton, Z. C., & Beetz, M. (2008). Aligning point cloud views using persistent feature histograms. 2008 IEEE/RSJ international conference on intelligent robots and systems (pp. 3384–3391). IEEE: Nice.

SAE-International. (2016). Taxonomy and definitions for terms related to driving automation systems for on-road motor vehicles. https://www.sae.org/standards/content/j3016_201609/. Accessed April 28, 2020.

Sallouha, H., Azari, M. M., Chiumento, A., & Pollin, S. (2018). Aerial anchors positioning for reliable RSS-based outdoor localization in urban environments. IEEE Wireless Communications Letters, 7(3), 376–379.

Scaramuzza, D., & Fraundorfer, F. (2011). Visual odometry [tutorial]. IEEE Robotics and Automation Magazine, 18(4), 80–92.

Schneider, O. (2010). Requirements for positioning and navigation in underground constructions. International conference on indoor positioning and indoor navigation (pp. 1–4). IEEE: Zurich.

Schönenberger. (2019). The automotive digital transformation and the economic impacts of existing data access model. https://www.fiaregion1.com/wp-content/uploads/2019/03/The-Automotive-Digital-Transformation_Full-study.pdf. Accessed April 28, 2020.

Seco, F., & Jiménez, A. R. (2017). Autocalibration of a wireless positioning network with a FastSLAM algorithm. 2017 international conference on indoor positioning and indoor navigation (pp. 1–8). IEEE: Sapporo.

Seif, H. G., & Hu, X. (2016). Autonomous driving in the iCity—HD maps as a key challenge of the automotive industry. Engineering, 2(2), 159–162.

Shi, W., Cao, J., Zhang, Q., Li, Y., & Xu, L. (2016). Edge computing: Vision and challenges. IEEE Internet of Things Journal, 3(5), 637–646.

Shin, E. H. (2005). Estimation techniques for low-cost inertial navigation. Calgary: University of Calgary.

Shin, S. H., Park, C. G., Kim, J. W., Hong, H. S., & Lee, J. M. (2007). Adaptive step length estimation algorithm using low-cost MEMS inertial sensors. In Proceedings of the 2007 IEEE sensors applications symposium. San Diego, CA: IEEE.

Singh, S. (2015). Critical reasons for crashes investigated in the national motor vehicle crash causation survey. https://crashstats.nhtsa.dot.gov/Api/Public/ViewPublication/812115. Accessed April 28, 2020.

Stephenson, S. (2016). Automotive applications of high precision GNSS. Nottingham: University of Nottingham.

Synced. (2018). The Golden Age of HD Mapping for Autonomous Driving. https://medium.com/syncedreview/the-golden-age-of-hd-mapping-for-autonomous-driving-b2a2ec4c11d. Accessed April 28, 2020.

TDK-InvenSense. (2020). MPU-9250 Nine-Axis (Gyro + Accelerometer + Compass) MEMS MotionTracking™ Device. https://invensense.tdk.com/products/motion-tracking/9-axis/mpu-9250/. Accessed April 28, 2020.

Tesla. (2020). Autopilot. https://www.tesla.com/autopilot. Accessed April 28, 2020.

Tiemann, J., Schweikowski, F., & Wietfeld, C. (2015). Design of an UWB indoor-positioning system for UAV navigation in GNSS-denied environments. 2015 international conference on indoor positioning and indoor navigation (IPIN) (pp. 1–7). IEEE: Calgary.

Titterton, D., & Weston, J. L. (2004). Strapdown inertial navigation technology. IET.

TomTom. (2020). Extending the vision of automated vehicles with HD Maps and ADASIS. http://download.tomtom.com/open/banners/Elektrobit_TomTom_whitepaper.pdf. Accessed April 28, 2020.

Trimble. (2020). Trimble RTX. https://positioningservices.trimble.com/services/rtx/. Accessed April 28, 2020.

Vasisht, D., Kumar, S., & Katabi, D. (2016). Decimeter-level localization with a single WiFi access point. 13th USENIX symposium on networked systems design and implementation (pp. 165–178). USENIX Association: Santa Clara.

Velodyne. (2020). HDL-64E High Definition Real-Time 3D Lidar. https://velodynelidar.com/products/hdl-64e/. Accessed April 28, 2020.

Wang, L., Chen, R. Z., Li, D. R., Zhang, G., Shen, X., Yu, B. G., et al. (2018). Initial assessment of the LEO based navigation signal augmentation system from Luojia-1A satellite. Sensors (Switzerland), 18(11), 3919.

Wang, Y., & Ho, K. C. (2015). An asymptotically efficient estimator in closed-form for 3-D AOA localization using a sensor network. IEEE Transactions on Wireless Communications, 14(12), 6524–6535.

Wang, Y. T., Li, J., Zheng, R., & Zhao, D. (2017). ARABIS: An Asynchronous acoustic indoor positioning system for mobile devices. 2017 international conference on indoor positioning and indoor navigation (pp. 1–8). IEEE: Sapporo.

WiFi-Alliance. (2020). Wi-Fi HaLow low power, long range Wi-Fi. https://www.wi-fi.org/discover-wi-fi/wi-fi-halow. Accessed April 28, 2020.

Will, H., Hillebrandt, T., Yuan, Y., Yubin, Z., & Kyas, M. (2012). The membership degree min-max localization algorithm. 2012 ubiquitous positioning, indoor navigation, and location based service (UPINLBS) (pp. 1–10). IEEE: Helsinki.

Witrisal, K., Meissner, P., Leitinger, E., Shen, Y., Gustafson, C., Tufvesson, F., et al. (2016). High-accuracy localization for assisted living: 5G systems will turn multipath channels from foe to friend. IEEE Signal Processing Magazine, 33(2), 59–70.

Wolcott, R. W., & Eustice, R. M. (2014). Visual localization within lidar maps for automated urban driving. 2014 IEEE/RSJ international conference on intelligent robots and systems (pp. 176–183). IEEE: Chicago, IL.

Wolcott, R. W., & Eustice, R. M. (2017). Robust LIDAR localization using multiresolution Gaussian mixture maps for autonomous driving. The International Journal of Robotics Research, 36(3), 292–319.

Zhang, J., Han, G., Sun, N., & Shu, L. (2017). Path-loss-based fingerprint localization approach for location-based services in indoor environments. IEEE Access, 5, 13756–13769.

Zhang, P., Lu, J., Wang, Y., & Wang, Q. (2017). Cooperative localization in 5G networks: A survey. ICT Express, 3(1), 27–32.

Zhou, B., Li, Q., Mao, Q., Tu, W., & Zhang, X. (2015). Activity sequence-based indoor pedestrian localization using smartphones. IEEE Transactions on Human-Machine Systems, 45(5), 562–574.

Zhuang, Y., Lan, H., Li, Y., & El-Sheimy, N. (2015). PDR/INS/WiFi integration based on handheld devices for indoor pedestrian navigation. Micromachines, 6(6), 793–812.

Zhuang, Y., Yang, J., Li, Y., Qi, L., & El-Sheimy, N. (2016). Smartphone-based indoor localization with bluetooth low energy beacons. Sensors, 16(5), 596.

Zhuang, Y., Wang, Q., Li, Y., Gao, Z. Z., Zhou, B. P., Qi, L. N., et al. (2019). The integration of photodiode and camera for visible light positioning by using fixed-lag ensemble Kalman smoother. Remote Sensing, 11(11), 1387.

Funding

This work was supported by the Canada Research Chairs program (Grant No. RT691875).

Author information

Contributions

NE devised the article structure and general contents and wrote parts of the manuscript. YL assisted in summarizing and writing the manuscript. Both authors have read and approved the final manuscript.

Authors' information

Naser El-Sheimy is a Professor at the Department of Geomatics Engineering, the University of Calgary. He is a Fellow of the Canadian Academy of Engineering and the US Institute of Navigation, and a Tier-I Canada Research Chair in Geomatics Multi-sensor Systems. His research expertise includes geomatics multi-sensor systems, GPS/INS integration, and mobile mapping systems. He is also the founder and CEO of Profound Positioning Inc. He has published two books, six book chapters, and over 450 papers in academic journals and in conference and workshop proceedings, for which he has received over 30 paper awards. He has supervised and graduated over 60 Master's and Ph.D. students. He is the recipient of many national and international awards, including the ASTech “Leadership in Alberta Technology” Award and the Association of Professional Engineers, Geologists, and Geophysicists of Alberta (APEGGA) Educational Excellence Award.